
- #CRAIGSLIST EMAIL ADDRESS EXTRACTOR SCRAPY HOW TO#

- #CRAIGSLIST EMAIL ADDRESS EXTRACTOR SCRAPY DRIVER#

- #CRAIGSLIST EMAIL ADDRESS EXTRACTOR SCRAPY CODE#

Unlike Beautiful Soup, the true power of Scrapy is its sophisticated mechanism. But don't let that complexity intimidate you. Scrapy is the most efficient web scraping framework on this list in terms of speed and features. It comes with selectors that let you select data from an HTML document using XPath or CSS expressions.

An added advantage is the speed at which Scrapy sends requests and extracts the data. It sends and processes requests asynchronously, and this is what sets it apart from other web scraping tools.

Apart from the basic features, you also get support for middlewares, a framework of hooks that injects additional functionality into the default Scrapy mechanism. You can't scrape JavaScript-driven websites with Scrapy out of the box, but you can use middlewares like scrapy-selenium, scrapy-splash, and scrapy-scrapingbee to add that functionality to your project.

Finally, when you're done extracting the data, you can have Scrapy export the items in a number of convenient file formats: CSV, JSON, and XML, to name a few.

While the names are similar, MechanicalSoup's syntax and workflow are extremely different from Beautiful Soup's. You create a browser session using MechanicalSoup and, when the page is downloaded, you use Beautiful Soup's methods like find() and find_all() to extract data from the HTML document.

Another impressive feature of MechanicalSoup is that it lets you fill out forms using a script. This is especially helpful when you need to enter something in a field (a search bar, for instance) to get to the page you want to scrape. MechanicalSoup's request handling is also convenient: it can automatically handle redirects and follow links on a page, saving you the effort of manually coding a section to do that.

Since MechanicalSoup is based on Beautiful Soup, there's a significant overlap in the drawbacks of both these libraries.
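To make the point about asynchronous requests concrete, here is a minimal sketch of the idea using only Python's standard library. Scrapy itself is built on Twisted, so this is not how Scrapy is implemented. The `fetch` and `crawl` functions are hypothetical, and the "request" is simulated with a short sleep instead of real network traffic.

```python
import asyncio


async def fetch(url, delay=0.1):
    """Stand-in for a network request: wait `delay` seconds, return a fake body."""
    await asyncio.sleep(delay)
    return f"<html>{url}</html>"


async def crawl(urls):
    # All simulated requests are started together, so the total wait is
    # close to the slowest single request rather than the sum of all of them.
    return await asyncio.gather(*(fetch(url) for url in urls))


if __name__ == "__main__":
    pages = asyncio.run(crawl(["https://example.com/a", "https://example.com/b"]))
    print(len(pages), "pages fetched")
```

The results come back in the same order as the input URLs, even though the waits overlap.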


I decided against automating the crawl with Selenium because Selenium needs to know how to find the menu using a CSS selector, class name, etc.


Normally links to JavaScript files would not be followed and scraped for further URLs. However, if a single-page AngularJS-based site with no sitemap is crawled, and the menu links are in non-anchor elements, the pages will not be discovered unless the ng-app is crawled as well to extract the destinations of the menu items.

A possible workaround to parsing the JS files would be to use Selenium WebDriver to automate the crawl.


The crawl_js parameter is needed only when a "perfect storm" of conditions exists:

- There are clickable menu items that are not contained in anchor tags.
- Those menu items execute JavaScript code that loads pages, but the URLs are in the JavaScript itself.
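As a rough illustration of what searching JavaScript files for URLs could look like, the sketch below pulls quoted strings out of a script and keeps those that resemble paths or links. It is not the project's actual code. The function name `urls_from_js`, the regular expression, and the "looks like a URL" heuristic are all assumptions made for this example.

```python
import re
from urllib.parse import urljoin

# Quoted strings without whitespace; a deliberately crude approximation
# of JavaScript string literals (assumption for this sketch).
QUOTED_RE = re.compile(r"""["']([^"'\s]+)["']""")


def urls_from_js(js_source, base_url):
    """Return absolute URLs for quoted strings that look like paths or links."""
    found = []
    for candidate in QUOTED_RE.findall(js_source):
        looks_like_url = (
            candidate.startswith(("/", "http://", "https://"))
            or candidate.endswith((".html", ".htm"))
        )
        if not looks_like_url:
            continue
        absolute = urljoin(base_url, candidate)  # resolve relative paths
        if absolute not in found:
            found.append(absolute)
    return found


if __name__ == "__main__":
    sample = "$routeProvider.when('/about', {templateUrl: \"views/about.html\"});"
    print(urls_from_js(sample, "https://example.com/app/"))
```

A heuristic like this will produce false positives on real sites, so a crawler would still need to filter the candidates (for example by domain) before following them.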

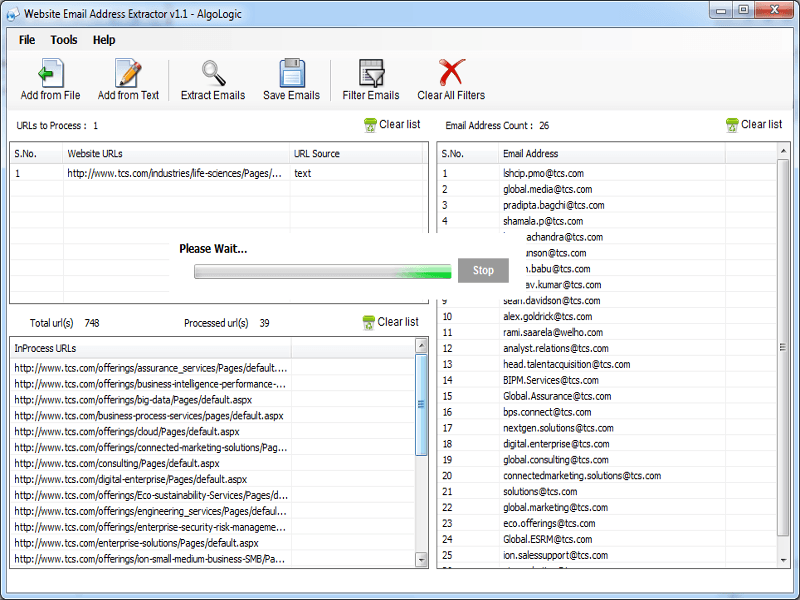

A general-purpose utility written in Python (v3.0+) for crawling websites to extract email addresses.

I implemented this using the popular Python web crawling framework Scrapy. I had never used it before, so this is probably not the most elegant implementation of a Scrapy-based email scraper (say that three times fast!).

The project consists of a single spider, ThoroughSpider, which takes 'domain' as an argument and begins crawling there. Two optional arguments add further tuning capability:

- subdomain_exclusions - optional list of subdomains to exclude from the crawl.
- crawl_js - optional boolean, whether or not to follow links to JavaScript files and also search them for URLs.
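The two core decisions such a spider has to make, which URLs to visit and which strings count as email addresses, can be sketched with the standard library alone. This is an illustration under stated assumptions, not ThoroughSpider's code. The helper names `extract_emails` and `is_allowed` are hypothetical, and the email pattern is intentionally simple.

```python
import re
from urllib.parse import urlparse

# Deliberately simple pattern; real address syntax is broader than this.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def extract_emails(text):
    """Return unique email-like strings from text, lowercased, in first-seen order."""
    seen = {}
    for match in EMAIL_RE.findall(text):
        seen.setdefault(match.lower(), None)
    return list(seen)


def is_allowed(url, domain, subdomain_exclusions=()):
    """True if url is on `domain` and not on one of the excluded subdomains."""
    host = (urlparse(url).hostname or "").lower()
    domain = domain.lower()
    if host != domain and not host.endswith("." + domain):
        return False  # off-site link
    # Whatever precedes the domain is the subdomain, e.g. "blog" in blog.example.com
    subdomain = host[: -len(domain)].rstrip(".")
    return subdomain not in {s.lower() for s in subdomain_exclusions}


if __name__ == "__main__":
    print(extract_emails("Write to Alice@Example.com or bob@example.org."))
    print(is_allowed("https://blog.example.com/post", "example.com", ["blog"]))
```

In a real spider these checks would run inside the parse callback: `is_allowed` on each discovered link before it is followed, and `extract_emails` on each response body.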
